Data pre-processing and data augmentation

In order to make the most of our few training examples, we will "augment" them via a number of random transformations, so that our model never sees the exact same picture twice. This helps prevent overfitting and helps the model generalize better. Keras provides a class for this. It lets you configure the random transformations and normalization operations to be applied to your image data during training, and instantiate generators of augmented image batches (and their labels).
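To make the idea concrete, here is a minimal pure-Python sketch of an augmenting batch generator. It is not the Keras API: the function names (`random_flip_and_shift`, `normalize`, `augmented_batches`), the choice of transformations (horizontal flip and a one-pixel shift), and the representation of an image as a list of rows are all assumptions made for illustration. With so few transformations a toy like this produces only a handful of variants per picture; a real pipeline would combine more of them.

```python
import random


def random_flip_and_shift(image, rng, max_shift=1):
    """Return a randomly transformed copy of `image` (a list of pixel rows).

    Hypothetical helper: assumes max_shift is smaller than the image width.
    """
    out = [row[:] for row in image]
    # Flip left-to-right with probability 0.5.
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]
    # Shift horizontally by up to `max_shift` pixels, repeating the edge pixel.
    shift = rng.randint(-max_shift, max_shift)
    if shift > 0:
        out = [[row[0]] * shift + row[:-shift] for row in out]
    elif shift < 0:
        out = [row[-shift:] + [row[-1]] * (-shift) for row in out]
    return out


def normalize(image):
    """Rescale 0-255 pixel values to the range [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def augmented_batches(images, labels, batch_size, seed=0):
    """Yield (batch_images, batch_labels) forever, re-augmenting on every pass."""
    rng = random.Random(seed)
    indices = list(range(len(images)))
    while True:
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            chosen = indices[start:start + batch_size]
            batch_x = [normalize(random_flip_and_shift(images[i], rng))
                       for i in chosen]
            batch_y = [labels[i] for i in chosen]
            yield batch_x, batch_y
```

Because the generator never terminates, a training loop would decide how many batches make up one epoch and call `next()` that many times. The original images are left unmodified, so every pass draws fresh random variants from the same source pictures.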


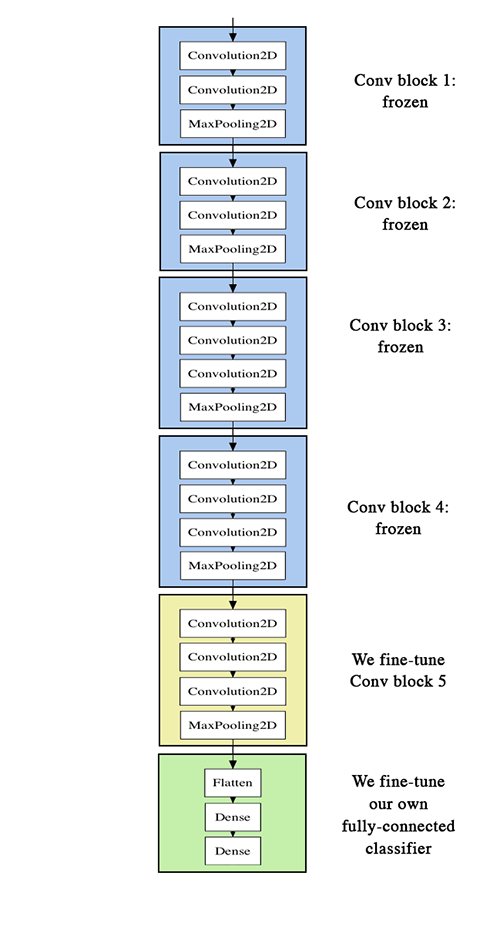

Convolutional neural networks, a pillar algorithm of deep learning, are by design one of the best models available for most "perceptual" problems (such as image classification), even with very little data to learn from. Training a convnet from scratch on a small image dataset will still yield reasonable results, without the need for any custom feature engineering. They are the right tool for the job.

But what's more, deep learning models are by nature highly repurposable: you can take, say, an image classification or speech-to-text model trained on a large-scale dataset, then reuse it on a significantly different problem with only minor changes, as we will see in this post. Specifically in the case of computer vision, many pre-trained models (usually trained on the ImageNet dataset) are now publicly available for download and can be used to bootstrap powerful vision models out of very little data.

The current literature suggests machine classifiers can score above 80% accuracy on this task. In the resulting competition, top entrants were able to score over 98% accuracy by using modern deep learning techniques. In our case, because we restrict ourselves to only 8% of the dataset, the problem is much harder.

On the relevance of deep learning for small-data problems

A message that I hear often is that "deep learning is only relevant when you have a huge amount of data". While not entirely incorrect, this is somewhat misleading. Certainly, deep learning requires the ability to learn features automatically from the data, which is generally only possible when lots of training data is available, especially for problems where the input samples are very high-dimensional, like images.

The .jpg images are organized in a directory tree of the form `data/train/dogs/dog001.jpg`, `data/train/dogs/dog002.jpg`, ...

To acquire a few hundred or a few thousand training images belonging to the classes you are interested in, one possibility would be to use the Flickr API to download pictures matching a given tag, under a friendly license.

In our examples we will use two sets of pictures, which we got from Kaggle: 1000 cats and 1000 dogs (although the original dataset had 12,500 cats and 12,500 dogs, we just took the first 1000 images for each class). We also use 400 additional samples from each class as validation data, to evaluate our models.

That is very few examples to learn from, for a classification problem that is far from simple. So this is a challenging machine learning problem, but it is also a realistic one: in a lot of real-world use cases, even small-scale data collection can be extremely expensive or sometimes near-impossible. Being able to make the most out of very little data is a key skill of a competent data scientist.

How difficult is this problem? When Kaggle started the cats vs. dogs competition (with 25,000 training images in total), a bit over two years ago, it came with the following statement:

"In an informal poll conducted many years ago, computer vision experts posited that a classifier with better than 60% accuracy would be difficult without a major advance in the state of the art. For reference, a 60% classifier improves the guessing probability of a 12-image HIP from 1/4096 to 1/459."
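The 1/4096 and 1/459 figures in Kaggle's statement can be checked with a few lines of arithmetic. The sketch below assumes that a "12-image HIP" (presumably a human interactive proof, i.e. a CAPTCHA-style challenge) is passed only when all 12 images are labeled correctly, and that each image is classified independently with the same accuracy. The function name `hip_guess_odds` is hypothetical.

```python
def hip_guess_odds(per_image_accuracy, n_images=12):
    """Return N such that the chance of labeling all images correctly is 1/N.

    Assumes independent per-image guesses with identical accuracy.
    """
    p_all_correct = per_image_accuracy ** n_images
    return 1.0 / p_all_correct


# Random guessing between two classes: 0.5 ** 12 = 1/4096.
print(round(hip_guess_odds(0.5)))  # 4096

# A 60%-accurate classifier: 0.6 ** 12 is roughly 1/459.
print(round(hip_guess_odds(0.6)))  # 459
```

Under these assumptions, moving from 50% to 60% per-image accuracy makes a full pass roughly nine times more likely, which is why even a modest classifier was considered a meaningful threat to this kind of challenge.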
